Xperience Digital Engineering

Web, Data, and AI: Igniting Your Digital Potential

Established in 2001, we are a group of passionate technologists with a strong focus on excellence and a commitment to providing high-quality, cost-effective solutions to our clients.

Our team of experts will work closely with you at every stage of the project to deliver an exceptional, tailor-made solution that fits your budget and business needs. We mitigate the risks associated with offshore software development while ensuring transparent and open communication.

Retail

Engaging Brand Experiences To Strengthen Your Customer Relationships

Technology & Media

Driving Digital Transformation Across Strategy, Sales, and Products.

Finance

Modern Systems to Address Regulatory Demands and Compliance

Consumer Goods

Predict inventory needs, improve customer targeting, and meet market demands with AI.

Our Services

Transform Your Vision Into Reality

Web

Driving digital transformation for two decades: harnessing product engineering, solution engineering, AWS capabilities, and DevOps strategies to redefine possibilities and deliver tailored, innovative solutions.

Data Engineering

Empowering data-driven decisions since 2016: crafting bespoke analytics ecosystems, from enterprise data warehouses to ML-enhanced data lakehouses, leveraging open-source innovation and state-of-the-art MLOps.

Our Strategic Partners

We leverage cutting-edge platforms to drive innovative digital transformations

Success Stories

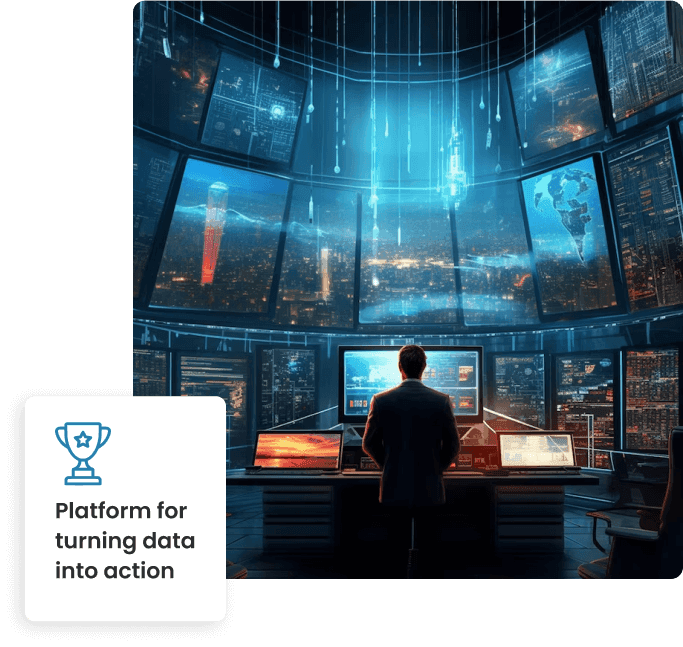

LENS

Lens is a unified data platform that enables you to leverage the power of big data to drive business decisions.

Lens offers a robust distributed architecture built on the Amazon Web Services (AWS) ecosystem with a wide range of analytical capabilities that can scale to satisfy your business requirements.

Lens Dashboard, a highly visual, information-rich analytics dashboard built on the Lens platform, enables you to compare key metrics, create custom reports and interactive dashboards, set business goals, and provide recommendations to users.

BuildReq

BuildReq is your comprehensive solution for construction procurement management. Developed by industry experts with over 23 years of experience, BuildReq is designed to optimize and simplify every aspect of the procurement lifecycle for construction projects. Whether you're managing a small-scale development or a large infrastructure project, BuildReq gives construction companies, project managers, and procurement teams a centralized, collaborative workspace to efficiently handle vendors, materials, quotations, and purchase orders. With BuildReq, you can streamline your processes, enhance collaboration, and ensure the success of your projects.

Factoring Solution

Blockchain-Enabled Factoring for the Digital Age

We have developed a blockchain-enabled factoring solution that can reduce the significant risks factoring agents face when providing financial assistance to manufacturers.